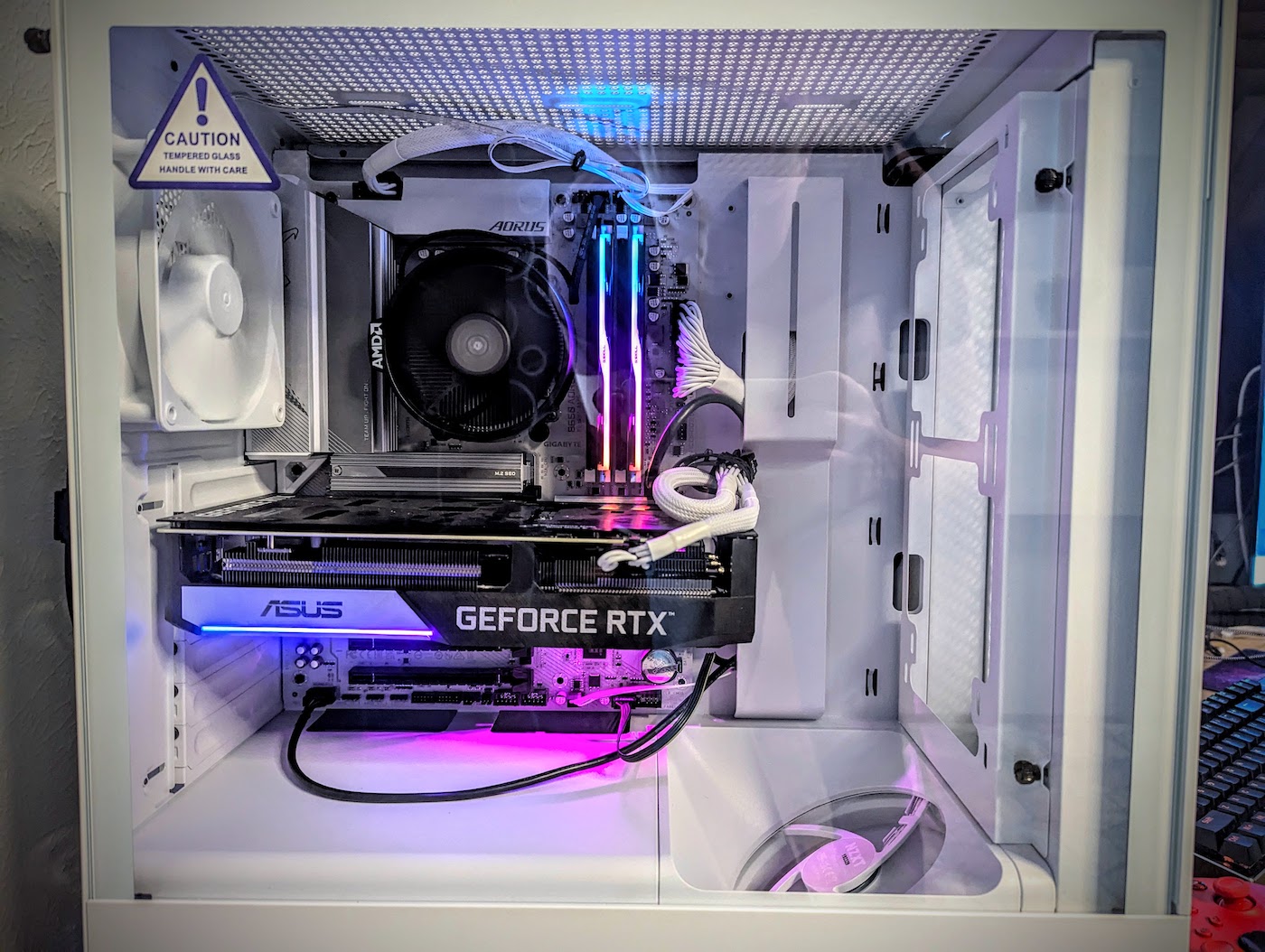

AMD and Intel want us to believe that the NPU is the future. There are currently two desktop options featuring an NPU: AMD’s Ryzen 7 8700G and Ryzen 5 8600G. We built with the latter, the 6-core part. Technically, this is the first time we have been able to build a desktop with an NPU. I guess we are pioneers?

Microsoft wants developers to adopt DirectML, but DirectML does not yet support AMD’s NPU. We also can’t see whether software is actually using the NPU, though Task Manager support for AMD’s NPU is coming soon. We have our fingers crossed.

You can’t buy a pre-built desktop with an NPU yet, so building one is technically the only way to “experience” it on a desktop. For now, all we know are the kinds of tasks AMD says it might perform in the “near future.”

We tried Mistral in StudioML and managed to get it running on the NPU (we think) by telling it to use OpenCL. While the bot was generating responses, the CPU showed activity, but since Task Manager doesn’t show the NPU yet, it’s impossible to know for certain.

Should you build a system with an NPU yet? Probably not, but if you want a system that might stay relevant for the next 5-7 years, AM5 could be your best bet today. If AM4 is any indicator, we could see around 7 years of platform support. AMD has said it will support AM5 through at least 2025, but it is still releasing new AM4 chips, including the 5700, 5700GT, and 5500GT. We have built systems with a number of AM4 chips, including the 2700, 3400G, 5700G, and 5700X, and they still run well.

What is TOPS anyway? It stands for trillions of operations per second. Apple says its M3 can handle 18 TOPS, while AMD’s 8600G and 8700G can reach 16 TOPS. What does that mean for users? It’s difficult to say, but trillions certainly feels… productive. We don’t know how TOPS will translate to real-world performance, and we don’t understand the potential bottlenecks yet.
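To get a rough feel for what a TOPS number could mean, here is a back-of-envelope sketch, not a benchmark. It rests on our own assumptions, none of which come from AMD or Apple: a language model needs roughly 2 operations per parameter for each generated token, the NPU streams every weight from system DDR5 once per token, the memory is dual-channel DDR5-5200 (about 83.2 GB/s), and the weights are 4-bit quantized. The function names are hypothetical. Real throughput depends on software support, precision, and overheads this ignores.

```python
# Back-of-envelope throughput ceilings for local LLM inference.
# ASSUMPTIONS (ours, not vendor figures): ~2 operations per parameter per
# generated token, and every weight is read from system memory once per token.

def compute_bound_tokens_per_s(tops: float, params: float) -> float:
    """Ceiling if raw compute (TOPS) were the only limit."""
    ops_per_token = 2 * params  # roughly one multiply and one add per weight
    return tops * 1e12 / ops_per_token


def bandwidth_bound_tokens_per_s(gb_per_s: float, params: float,
                                 bytes_per_param: float) -> float:
    """Ceiling if memory bandwidth were the only limit."""
    model_bytes = params * bytes_per_param
    return gb_per_s * 1e9 / model_bytes


if __name__ == "__main__":
    params = 7e9           # hypothetical 7B-parameter model, roughly Mistral 7B's size
    npu_tops = 16          # AMD's quoted NPU figure for the 8600G/8700G
    ram_gb_s = 83.2        # assumed dual-channel DDR5-5200: 2 x 8 bytes x 5200 MT/s
    bytes_per_param = 0.5  # assumed 4-bit quantized weights

    compute = compute_bound_tokens_per_s(npu_tops, params)
    memory = bandwidth_bound_tokens_per_s(ram_gb_s, params, bytes_per_param)
    print(f"compute ceiling:   {compute:,.0f} tokens/s")
    print(f"bandwidth ceiling: {memory:,.1f} tokens/s")
    print(f"tighter limit:     {'memory' if memory < compute else 'compute'}")
```

Under these assumptions the compute ceiling is about 1,143 tokens/s, while the memory ceiling is under 24 tokens/s. If the assumptions hold, memory bandwidth rather than TOPS would be the tighter limit, which is one reason a headline TOPS figure alone says little about real-world speed.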

Do you need the NPU now? Definitely not yet. We expect AMD, Intel, and Microsoft to tell us what it could specifically do in the future; for now, its capabilities are mostly hypothetical. Developers need to decide what they want it to do. Some types of tasks are better suited to local inference because latency is much lower than with cloud inference, but developers will ultimately decide, partly based on how these machines sell. Why develop for something essentially nobody has yet? That kind of software development is probably difficult to justify today.

What does this mean for Nvidia? For CUDA-optimized code, nothing: NPUs will not run CUDA. But if users can run their fancy AI without Nvidia hardware, the barrier to entry for this kind of capability drops dramatically. That might hurt Nvidia in the consumer hardware space, but most of its revenue now comes from data centers anyway.

TL;DR: The NPU could democratize access to local AI and let us run larger models than current GPUs can handle. It will make local inference less expensive in terms of both hardware costs and electricity. It should also mean better battery life for laptops.

Disclosure: Devin owns stock in Nvidia and several of its GPUs.